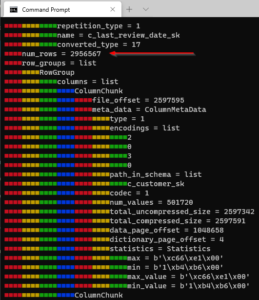

You can now unload the result of a Redshift query to your S3 data lake in Apache Parquet format. This enables you to save data transformation and enrichment you have done in Redshift into your S3 data lake in an open format. The Parquet format is up to 2x faster to unload and consumes up to 6x less storage in S3, compared to text formats.

extraunloadoptions (required: No; default: N/A): Extra options to append to the Redshift UNLOAD command. Not all options are guaranteed to work, as some options might conflict.
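As a rough illustration of what such an unload can look like, the sketch below assembles an UNLOAD statement that writes Parquet files. The helper name `build_unload_parquet`, the table name, the bucket prefix, and the role ARN are all hypothetical placeholders, not values from this article.

```python
def build_unload_parquet(query: str, s3_prefix: str, iam_role_arn: str) -> str:
    """Return an UNLOAD statement that writes the query result as Parquet.

    The query is wrapped in single quotes by UNLOAD, so any single quotes
    inside it are doubled.
    """
    escaped_query = query.replace("'", "''")
    return (
        f"UNLOAD ('{escaped_query}')\n"
        f"TO '{s3_prefix}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "FORMAT AS PARQUET;"
    )


# Placeholder values for illustration only.
statement = build_unload_parquet(
    "SELECT * FROM sales WHERE region = 'EU'",
    "s3://awsexamplebucket/unload/sales_",
    "arn:aws:iam::123456789012:role/ExampleUnloadRole",
)
print(statement)
```

The generated text can then be run with whichever SQL client you already use against the cluster.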

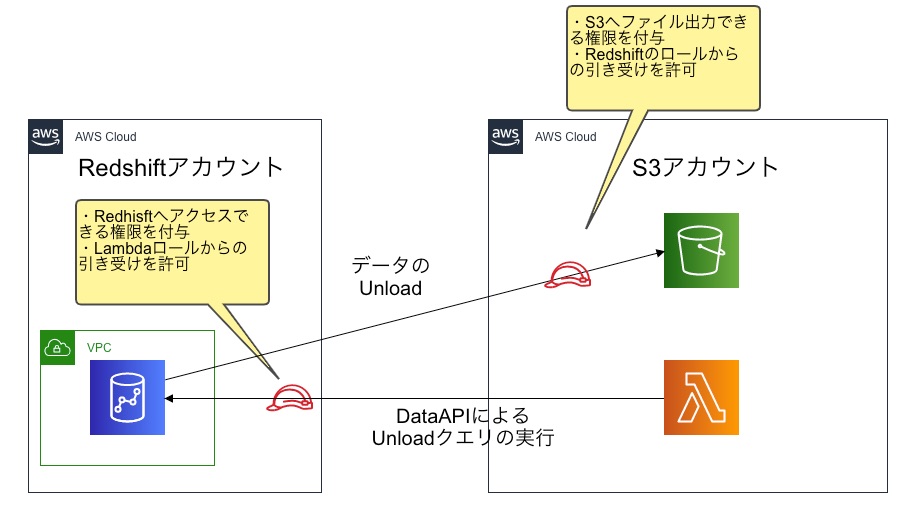

unloads3format (required: No; default: Parquet): The format with which to unload query results. Valid options are Parquet and Text; Text unloads query results in the pipe-delimited text format.

You can unload tables with SUPER data columns to Amazon S3 in the Parquet format. If the Amazon Redshift cluster and the S3 bucket are in different Regions, then you must add the REGION parameter to the COPY or UNLOAD command.

Create an IAM role in the account that's using Amazon S3 (RoleA). Choose Policies, and then choose Create policy.
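The two options described here can be collected into a small dictionary before they are handed to whatever connector reads them. This is only a sketch: the helper `unload_options` is hypothetical, it assumes the options are passed as plain string key/value pairs, and the `REGION` value shown is a placeholder that may or may not be accepted as an extra UNLOAD option in your setup.

```python
# Formats named in the option description, compared case-insensitively.
VALID_UNLOAD_FORMATS = {"PARQUET", "TEXT"}


def unload_options(unload_format="Parquet", extra_unload_options=None):
    """Build the option dictionary, rejecting formats the text does not list."""
    if unload_format.upper() not in VALID_UNLOAD_FORMATS:
        raise ValueError(
            f"unloads3format must be Parquet or Text, got {unload_format!r}"
        )
    options = {"unloads3format": unload_format}
    if extra_unload_options:
        # Appended to the UNLOAD command as-is; conflicting options may fail.
        options["extraunloadoptions"] = extra_unload_options
    return options


# Placeholder Region for a bucket that lives outside the cluster's Region.
opts = unload_options("Parquet", "REGION 'us-west-2'")
print(opts)
```

Validating the format up front keeps a typo from surfacing later as a failed unload.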

REDSHIFT UNLOAD TO S3 PARQUET DRIVERS

The Amazon Redshift Data API simplifies access to Amazon Redshift by eliminating the need for configuring drivers and managing database connections. It simplifies data access, ingest, and egress from programming languages and platforms supported by the AWS SDK such as Python, Go, Java, Node.js, PHP, Ruby, and C++.

The Amazon Redshift UNLOAD command exports the result of a query or the content of a table to one or more text, JSON, or Apache Parquet files on Amazon S3. UNLOAD automatically encrypts data files using Amazon S3 server-side encryption (SSE-S3). You can unload the result of an Amazon Redshift query to your Amazon S3 data lake in Apache Parquet, an efficient open columnar storage format for analytics.

My customer has a 2 to 4 node dc2.8xlarge Redshift cluster and they want to export data to Parquet in the optimal size (1 GB) per file with option.

To access Amazon S3 resources that are in a different account from where Amazon Redshift is in use, perform the following steps. These steps apply to both Redshift Serverless and Redshift provisioned data warehouse:

1. Create RoleA, an IAM role in the Amazon S3 account.
2. Create RoleB, an IAM role in the Amazon Redshift account with permissions to assume RoleA.
3. Test the cross-account access between RoleA and RoleB.

Note: These steps assume that the Amazon Redshift cluster and the S3 bucket are in the same Region.

Note: These steps work regardless of your data format. However, there might be some changes in the COPY and UNLOAD command syntax while performing these operations. For example, if you're using the Parquet data format, your syntax looks like this: `copy table_name from 's3://awsexamplebucket/crosscopy1.csv' iam_role 'arn:aws:iam::Amazon_Redshift_Account_ID:role/RoleB,arn:aws:iam::Amazon_S3_Account_ID:role/RoleA' format as parquet;`
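To make the role chaining and the Data API more concrete, here is a minimal sketch that builds the comma-separated role chain (RoleB first, then RoleA, with no space after the comma), wraps it in a COPY statement, and submits it through a Data API client. The helper names, account IDs, cluster identifier, database, and user are hypothetical; the client is assumed to expose `execute_statement` and `describe_statement` the way the boto3 `redshift-data` client does.

```python
import time


def chained_role_arn(redshift_account_id, s3_account_id,
                     role_b="RoleB", role_a="RoleA"):
    """Join the Redshift-account role and the S3-account role into one chain."""
    return (
        f"arn:aws:iam::{redshift_account_id}:role/{role_b},"
        f"arn:aws:iam::{s3_account_id}:role/{role_a}"
    )


def cross_account_copy_sql(table, s3_path, role_chain):
    """Build a COPY statement that loads Parquet data using the chained roles."""
    return (
        f"COPY {table} FROM '{s3_path}' "
        f"IAM_ROLE '{role_chain}' FORMAT AS PARQUET;"
    )


def run_statement(client, sql, cluster_id, database, db_user, poll_seconds=1.0):
    """Submit sql through the Data API client and poll until it stops running."""
    statement_id = client.execute_statement(
        ClusterIdentifier=cluster_id,
        Database=database,
        DbUser=db_user,
        Sql=sql,
    )["Id"]
    while True:
        description = client.describe_statement(Id=statement_id)
        if description["Status"] in ("FINISHED", "FAILED", "ABORTED"):
            return description
        time.sleep(poll_seconds)


# Placeholder account IDs and paths for illustration only.
chain = chained_role_arn("111111111111", "222222222222")
copy_sql = cross_account_copy_sql(
    "table_name", "s3://awsexamplebucket/crosscopy1.parquet", chain
)
print(copy_sql)
```

With boto3 installed and credentials configured, a call could look like `run_statement(boto3.client("redshift-data"), copy_sql, "example-cluster", "dev", "awsuser")`. A result whose `Status` is `FAILED` is a reasonable first signal that the trust between RoleA and RoleB needs checking.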
